Getting Started

The Genesis Cloud Inference Service lets you interact with a variety of large language models (LLMs) through a single, unified API.

The service runs on our own GPU infrastructure, ensuring high availability and performance.

OpenAI-Compatible Endpoints

https://inference-api.genesiscloud.com/openai/v1

Available Models and Pricing

During the beta phase of the Inference Service, the available models and their pricing may change frequently. Use the /models endpoint (no authentication required) to get the currently available models and their pricing.
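A minimal sketch of listing the models from Python using only the standard library. It assumes the /models response follows the OpenAI list format (a JSON object whose `data` array holds entries with an `id` field). The names of the pricing fields are not documented on this page, so the sketch does not try to read them; inspect the raw JSON instead. The helpers `fetch_models` and `model_ids` are hypothetical.

```python
import json
import urllib.request

BASE_URL = "https://inference-api.genesiscloud.com/openai/v1"


def fetch_models(base_url: str = BASE_URL, timeout: float = 10.0) -> dict:
    """GET {base_url}/models and return the decoded JSON body (no token needed)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return json.load(resp)


def model_ids(payload: dict) -> list:
    """Extract model IDs, assuming the OpenAI list shape {"data": [{"id": ...}, ...]}."""
    return [entry["id"] for entry in payload.get("data", [])]
```

For example, `print(model_ids(fetch_models()))` prints the IDs of the models currently on offer, and `print(json.dumps(fetch_models(), indent=2))` shows the full entries, including whatever pricing fields the service returns.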

Authentication

Requests to the completions endpoints must be authenticated.

Authenticate each request by sending your API token as a bearer token in the Authorization HTTP header (Authorization: Bearer <token>).

To generate an API token, visit the Keys and Tokens section of the console dashboard. Make sure to select Inference Service when creating a new token.

Please Note: It is essential that you keep your secret token private, as it provides access to everything in your Genesis Cloud account. If you accidentally publish your token, revoke it immediately.
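A minimal sketch of building authenticated request headers in Python, assuming the token is stored in an environment variable named `INFERENCE_API_TOKEN` (the name the REST example below uses). Reading the token from the environment keeps it out of source code and version control. The helper `auth_headers` is hypothetical.

```python
import os
from typing import Optional


def auth_headers(token: Optional[str] = None) -> dict:
    """Return headers for an authenticated JSON request.

    Falls back to the INFERENCE_API_TOKEN environment variable when no token
    is passed, and fails early instead of sending an unauthenticated request.
    """
    token = token or os.environ.get("INFERENCE_API_TOKEN")
    if not token:
        raise RuntimeError("Set INFERENCE_API_TOKEN or pass a token explicitly")
    return {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
```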

OpenAI Compatibility

Our inference service is OpenAI-compatible. This means you can access the service not only via REST, but also with the OpenAI client libraries (JavaScript and Python).

We currently support the /models, /chat/completions and /completions endpoints, with more to come.

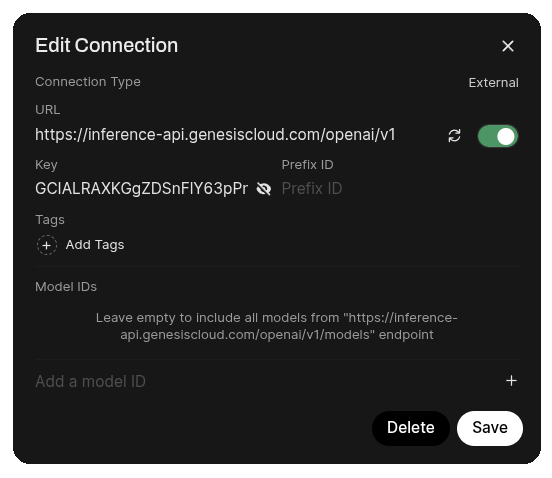
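Because the endpoints follow the OpenAI request format, they can also be called without an SDK. A minimal sketch that prepares (but does not send) a POST to /completions with Python's standard library. The `prompt` and `max_tokens` fields are the standard OpenAI completion parameters; reusing the chat model from the examples below for plain completions is an assumption, so check /models for what is actually available. `build_completion_request` is a hypothetical helper.

```python
import json
import urllib.request

BASE_URL = "https://inference-api.genesiscloud.com/openai/v1"


def build_completion_request(token: str, prompt: str,
                             model: str = "deepseek-ai/DeepSeek-V3-0324",
                             max_tokens: int = 64) -> urllib.request.Request:
    """Prepare a POST to {BASE_URL}/completions with an OpenAI-style JSON body."""
    body = json.dumps({"model": model, "prompt": prompt, "max_tokens": max_tokens})
    return urllib.request.Request(
        f"{BASE_URL}/completions",
        data=body.encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the request to `urllib.request.urlopen` and decode the JSON reply; in the OpenAI completions format the generated text is expected under `choices[0]["text"]`.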

External Tools (UIs, code editors, etc.)

To configure external tools such as open-webui or code editors, simply supply our base URL and your API token. No further configuration is required.
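Many OpenAI-compatible tools, and the official OpenAI Python library itself, can read the endpoint and key from the `OPENAI_BASE_URL` and `OPENAI_API_KEY` environment variables; other tools use their own settings fields, so check each tool's documentation. A minimal sketch that launches a command with those variables pointed at Genesis Cloud; `tool_env` and `run_tool` are hypothetical helpers, and the command you pass is your own.

```python
import os
import subprocess
from typing import Mapping, Optional

BASE_URL = "https://inference-api.genesiscloud.com/openai/v1"


def tool_env(token: str, base: Optional[Mapping[str, str]] = None) -> dict:
    """Copy the given environment (default: os.environ) and add OpenAI-style settings."""
    env = dict(os.environ if base is None else base)
    env["OPENAI_BASE_URL"] = BASE_URL
    env["OPENAI_API_KEY"] = token
    return env


def run_tool(command: list, token: str) -> int:
    """Run an external command with the endpoint and token in its environment."""
    return subprocess.run(command, env=tool_env(token), check=False).returncode
```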

JavaScript Example

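```javascript
// Requires the "openai" npm package. Top-level await needs an ES module (e.g. a .mjs file).
import OpenAI from "openai";

const client = new OpenAI({
  // Read the token from the environment instead of hard-coding it.
  apiKey: process.env.INFERENCE_API_TOKEN,
  baseURL: "https://inference-api.genesiscloud.com/openai/v1",
});

const response = await client.chat.completions.create({
  model: "deepseek-ai/DeepSeek-V3-0324",
  messages: [
    {
      role: "user",
      content: "Explain to me how AI works",
    },
  ],
});

// Print the assistant message of the first choice.
console.log(response.choices?.[0]?.message);
```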

Python Example

```python
import os

from openai import OpenAI

client = OpenAI(
    # Read the token from the environment instead of hard-coding it.
    api_key=os.environ["INFERENCE_API_TOKEN"],
    base_url="https://inference-api.genesiscloud.com/openai/v1",
)

response = client.chat.completions.create(
    model="deepseek-ai/DeepSeek-V3-0324",
    messages=[
        {
            "role": "user",
            "content": "Explain to me how AI works",
        }
    ],
)

# Print the assistant message of the first choice.
print(response.choices[0].message)
```
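The Python example prints the whole message object. When you only need the generated text and the token count (for instance, to track usage against the pricing returned by /models), a minimal sketch that reads them from a decoded chat completion response. It assumes the standard OpenAI response shape: `choices[0].message.content` plus a `usage` object with a `total_tokens` field. With the SDK, you can pass it `response.model_dump()`. `summarize_chat_response` is hypothetical.

```python
from typing import Any, Dict, Tuple


def summarize_chat_response(payload: Dict[str, Any]) -> Tuple[str, int]:
    """Return (assistant text, total tokens) from an OpenAI-style chat completion."""
    choices = payload.get("choices") or []
    text = ""
    if choices:
        # content may be null (e.g. for tool calls), so normalise it to "".
        text = choices[0].get("message", {}).get("content") or ""
    usage = payload.get("usage") or {}
    return text, int(usage.get("total_tokens", 0))
```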

REST Example

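```shell
# Assumes INFERENCE_API_TOKEN is set in your shell.
# "stream": true returns the reply incrementally as server-sent events;
# "include_usage" requests token usage in an extra chunk at the end of the stream.
curl "https://inference-api.genesiscloud.com/openai/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $INFERENCE_API_TOKEN" \
  -d '{
    "model": "deepseek-ai/DeepSeek-V3-0324",
    "messages": [
      {
        "role": "user",
        "content": "Explain to me how AI works"
      }
    ],
    "stream": true,
    "stream_options": {
      "include_usage": true
    }
  }'
```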
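With `"stream": true` the reply arrives incrementally instead of as a single JSON body. Assuming the service follows the OpenAI streaming format (server-sent events with one `data: {...}` line per chunk, a final `data: [DONE]`, and, with `include_usage`, a last chunk whose `usage` field is set and whose `choices` list is empty), a minimal Python sketch that assembles the text and usage from those lines. `collect_stream` is hypothetical.

```python
import json
from typing import Any, Dict, Iterable, List, Optional, Tuple


def collect_stream(lines: Iterable[str]) -> Tuple[str, Optional[Dict[str, Any]]]:
    """Join the content deltas of an OpenAI-style SSE stream and return (text, usage)."""
    parts: List[str] = []
    usage: Optional[Dict[str, Any]] = None
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta", {}).get("content")
            if delta:
                parts.append(delta)
        if chunk.get("usage"):
            usage = chunk["usage"]
    return "".join(parts), usage
```

To feed it from a live request, iterate over the response returned by `urllib.request.urlopen` and decode each line as UTF-8 before passing it in.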